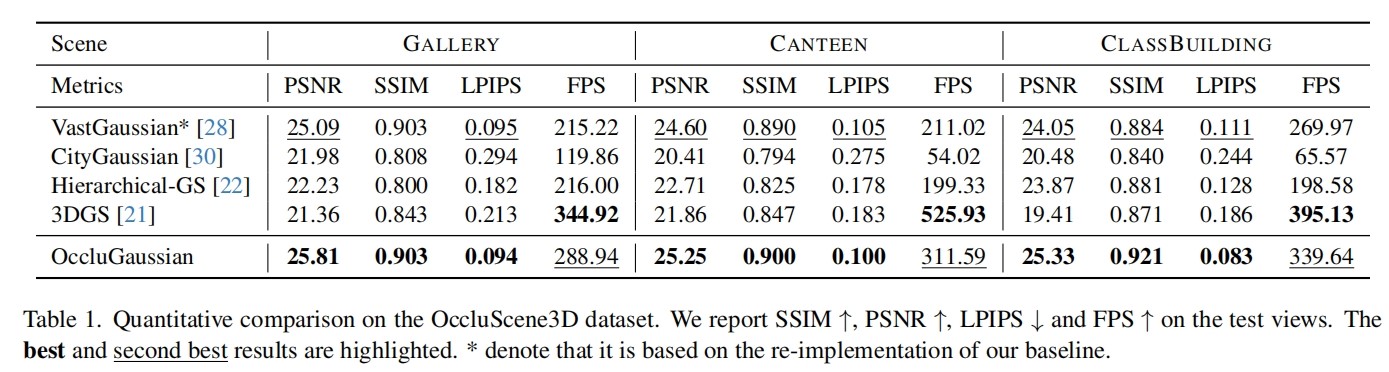

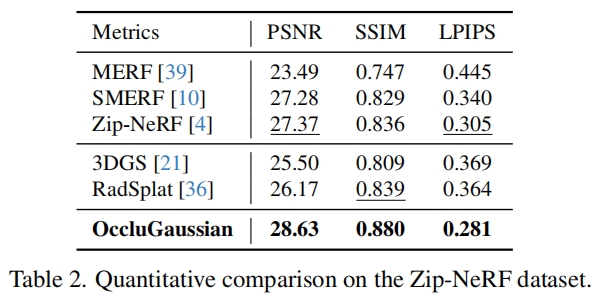

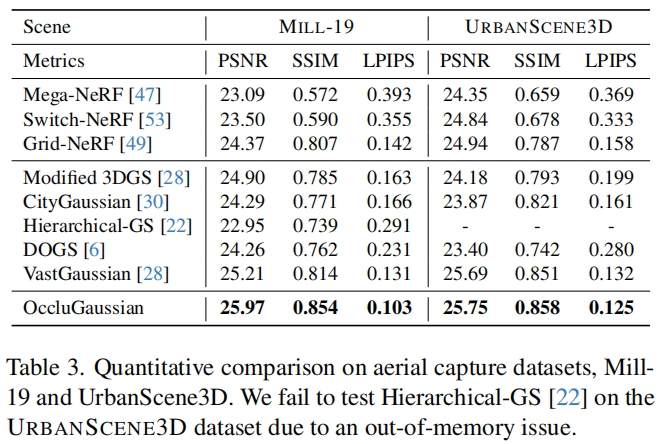

Overview

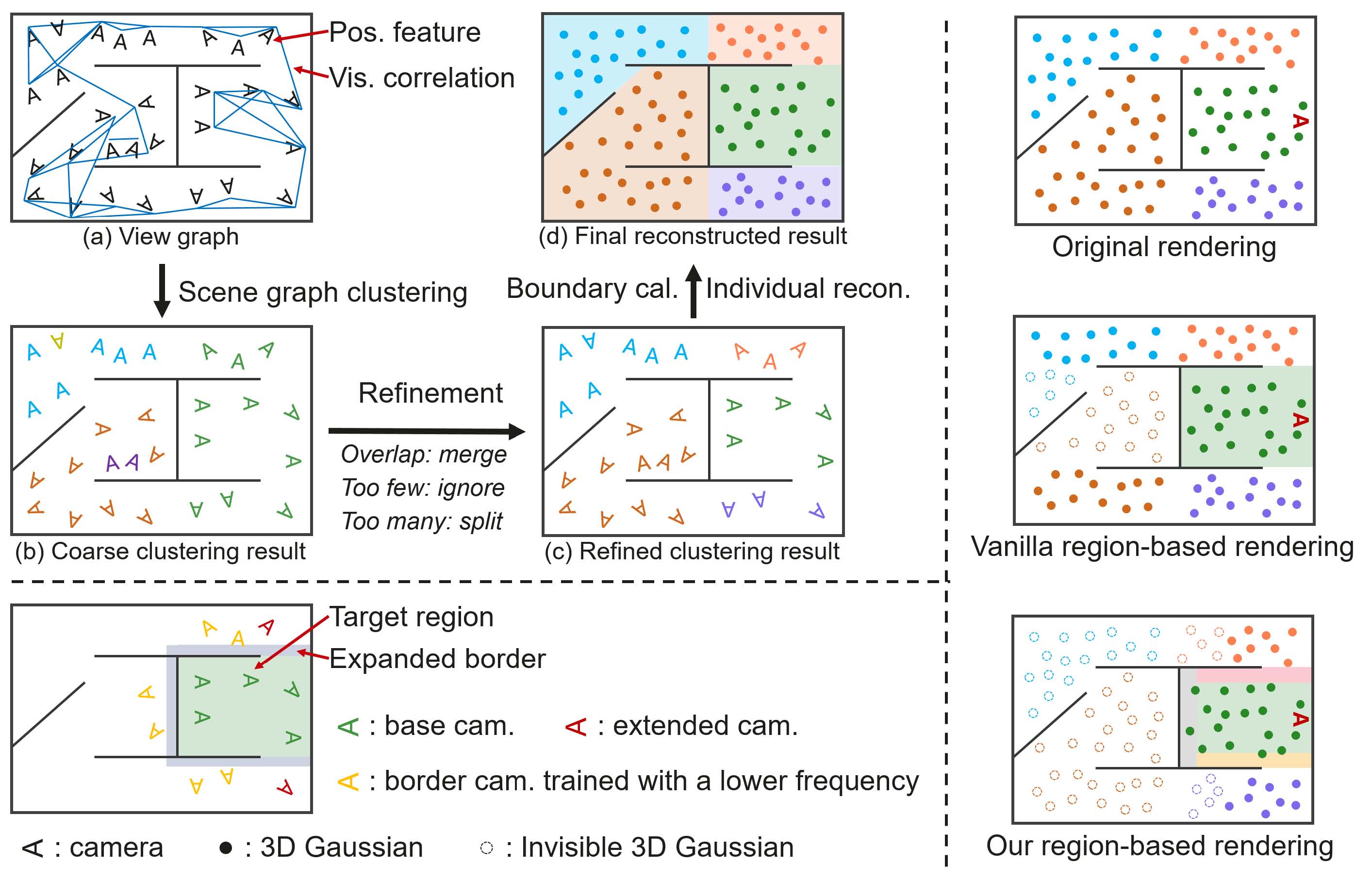

Overview of OccluGaussian. Top left: To reconstruct a large scene, we divide it into multiple regions by adopting an occlusion-aware scene division strategy. (a) We first create an attributed view graph from the posed cameras, where nodes represent cameras with positional features, and edges represent visibility correlations between them. (b) A graph clustering algorithm is applied to the view graph to cluster the cameras into multiple regions, and (c) we further refine them to obtain more balanced sizes. (d) The region boundaries are calculated based on the clustered cameras. Each region is individually reconstructed and finally merged into a complete model. Bottom left: Each region is reconstructed using three sets of training cameras: base cameras located inside the region, extended cameras providing adequate visual content of the region, and border cameras used to constrain Gaussian primitives near the boundaries. Right: We introduce a region-based rendering technique, which culls 3D Gaussians that are occluded from the region where the rendering viewpoint is located. Furthermore, we subdivide the scene into smaller sub-regions with fewer essential 3D Gaussians. This approach effectively reduces redundant computations and further boosts our rendering speed.
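As a rough illustration of steps (a), (b), and (d), the sketch below builds a small view graph, clusters the cameras, and derives region bounds. It is a minimal sketch under stated assumptions, not the authors' implementation: the visibility correlation is approximated here by the Jaccard overlap of the 3D points each camera observes, the graph clustering algorithm is replaced by connected components on a thresholded graph, and the region boundary is taken as the axis-aligned bounding box of the clustered camera positions. The function names, the `threshold` parameter, and the toy data are all hypothetical, and the balancing refinement of step (c) is omitted.

```python
import numpy as np


def build_view_graph(visible_points):
    """Return a symmetric edge-weight matrix for the view graph.

    visible_points: list of sets, one per camera, holding the IDs of the
    3D points that camera observes. Edge weight = Jaccard overlap
    (an assumed proxy for the visibility correlation).
    """
    n = len(visible_points)
    weights = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            union = len(visible_points[i] | visible_points[j])
            if union:
                shared = len(visible_points[i] & visible_points[j])
                weights[i, j] = weights[j, i] = shared / union
    return weights


def cluster_cameras(weights, threshold=0.2):
    """Stand-in clustering: connected components of the thresholded graph."""
    n = weights.shape[0]
    labels = [-1] * n
    region = 0
    for start in range(n):
        if labels[start] != -1:
            continue
        labels[start] = region
        stack = [start]
        while stack:
            node = stack.pop()
            # Neighbours are cameras whose edge weight passes the threshold.
            for nb in np.flatnonzero(weights[node] >= threshold):
                if labels[nb] == -1:
                    labels[nb] = region
                    stack.append(int(nb))
        region += 1
    return labels


def region_bounds(positions, labels):
    """Axis-aligned bounding box (min corner, max corner) per region."""
    positions = np.asarray(positions, dtype=float)
    labels = np.asarray(labels)
    return {
        int(r): (positions[labels == r].min(axis=0),
                 positions[labels == r].max(axis=0))
        for r in np.unique(labels)
    }


# Toy scene: cameras 0-1 and cameras 2-3 sit in two rooms separated by a
# wall, so the two pairs share no visible points.
visible = [{1, 2, 3}, {2, 3, 4}, {10, 11, 12}, {11, 12, 13}]
positions = [(0, 0, 0), (1, 0, 0), (5, 0, 0), (6, 0, 0)]

weights = build_view_graph(visible)
labels = cluster_cameras(weights)
bounds = region_bounds(positions, labels)
print(labels)  # [0, 0, 1, 1]
```

In this toy case the two camera pairs end up in separate regions even though they are spatially close, because no edge connects cameras that see disjoint content. That is the intuition behind dividing by visibility rather than by position alone.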